Verification Metrics for Simulation & Formal, & Static Sign-Off

DAC 2019 panel presentation by Prakash Narain of Real Intent (edited transcript)

Presentation Overview

Real Intent president and CEO Prakash Narain of presents on how static verification compares with dynamic verification, and how simulation, formal, and static sign-off each rate in terms of the 3 key verification metrics.

Comparing Static Verification vs. Dynamic Verification

First of all, let’s discuss static vs dynamic verification.

- We’re familiar with static methods — they utilize search and analysis techniques to check for design failures under all possible test cases. Examples would be CDC (clock domain crossing), RDC (reset domain crossing), and formal.

- On the other hand, dynamic methods compute design behavior dynamically, and then check the computed behavior for failures under user-specified test cases. Examples of dynamic methods would be simulation and emulation.

So, what are some of the characteristics of the various design methodologies?

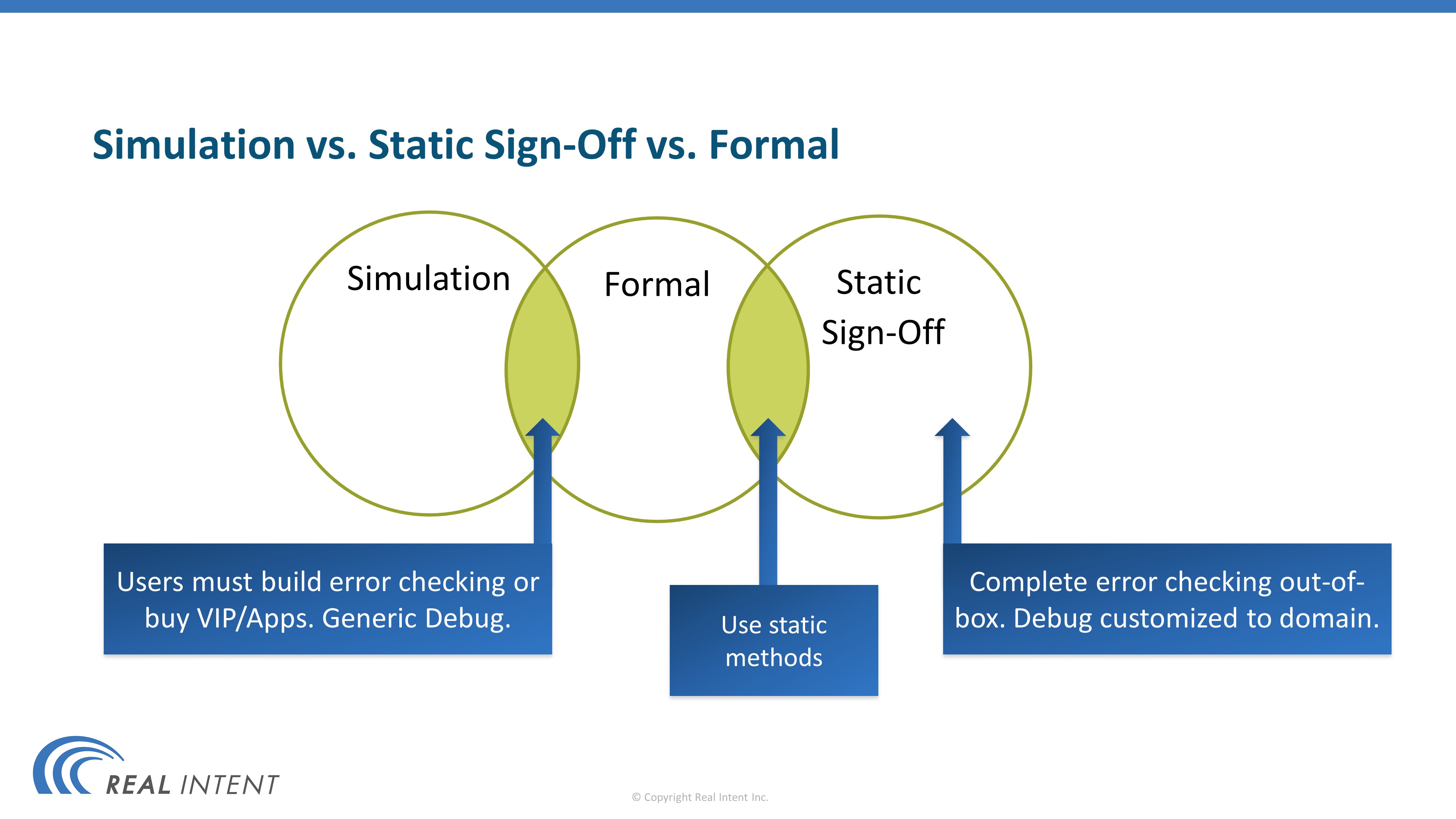

Static Sign-Off, Formal & Simulation– Overlap & Differences

I want to talk about three methodologies; simulation, static sign-off, and formal.

If you look at formal and simulation, they are both generic applications. That means the user must build error checking. To facilitate the error checking, they can buy VIPs or apps; but it’s the user’s responsibility to build the error checking.

And because these are generic products, the debug is also generic.

Formal and static sign-off are both static methods; however, static sign-off includes complete and customized error checking in the context of the tool; as a result, the debugging is also customized to the application at hand.

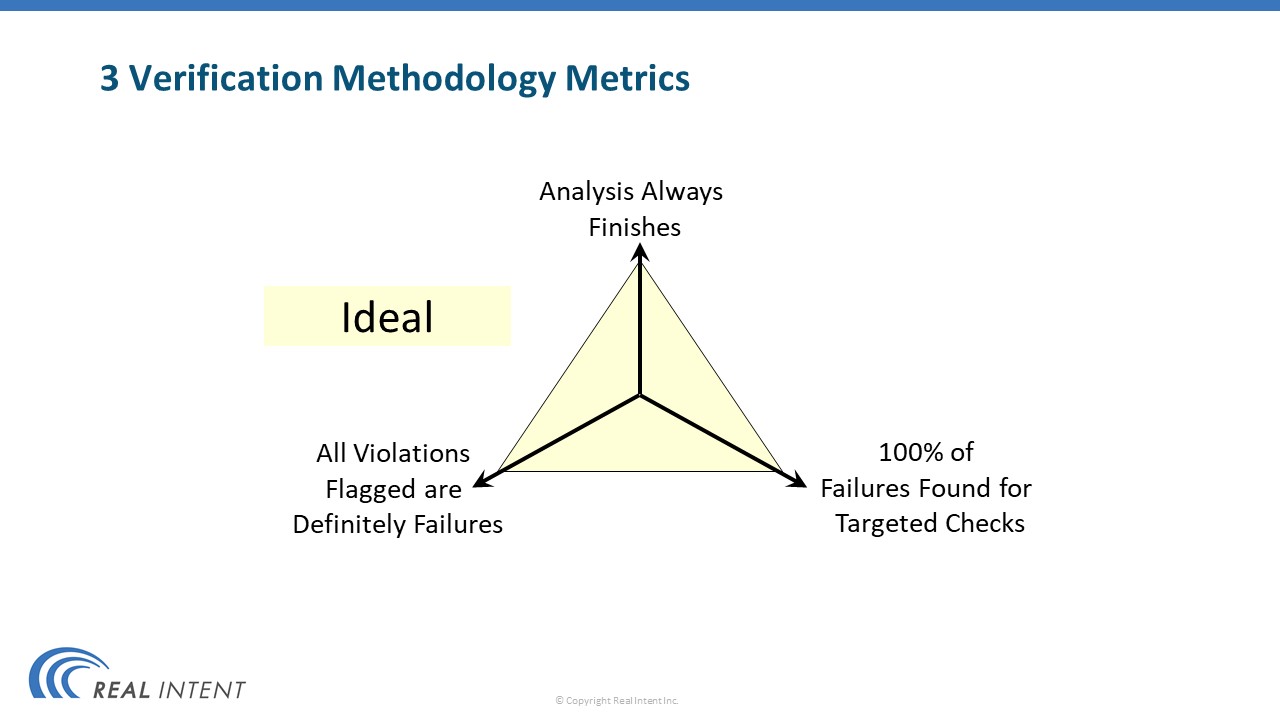

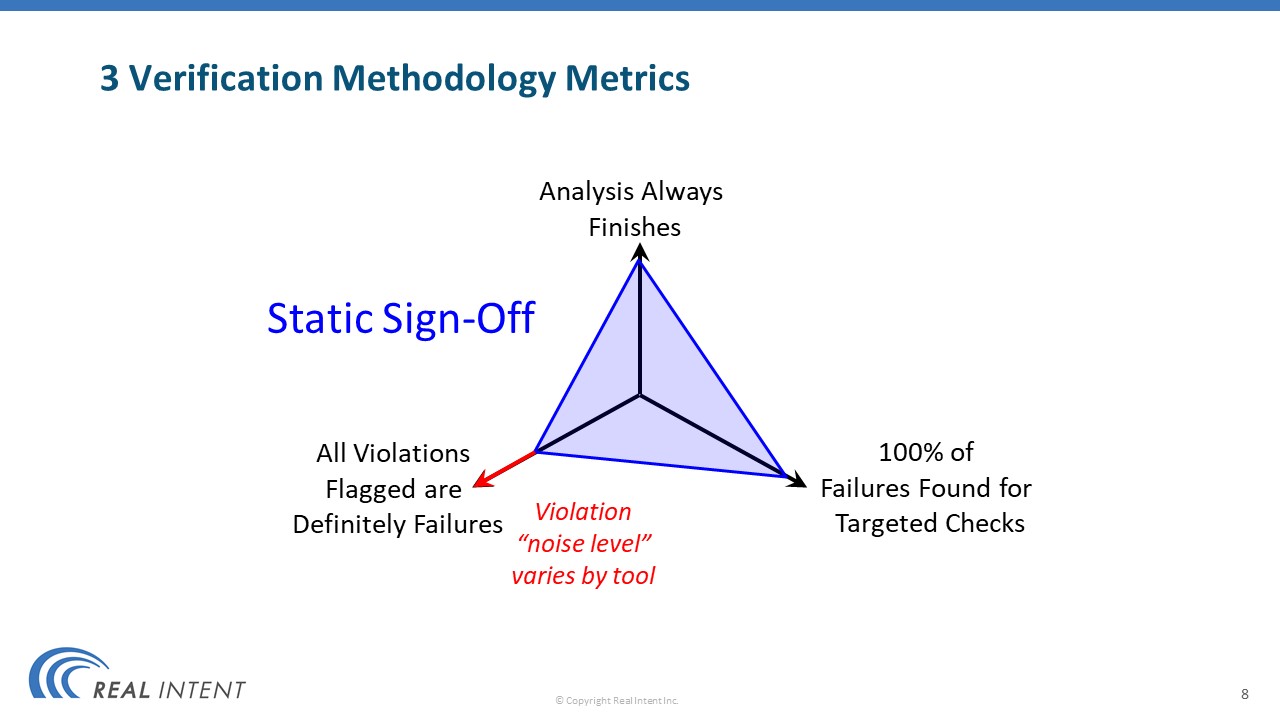

Three Key Functional Verification Metrics

Here’s a very simplified model of how we can evaluate the merits of each of these three approaches.

- The first metric is that the analysis always finishes, which means that the method completes in a practical time frame.

- The metric on the left says that all the violations flagged by the analysis are definitely design failures.

- The third metric says that, for the targeted checks, 100% of the failures are found. This is another way of saying that you can prove the absence of any errors in the design.

These are the three dimensions on which we can evaluate the various methodologies.

The ideal scenario is a verification methodology that rates very high on each of these metrics.

Simulation, Formal & Static Sign-Off Performance Against Verification Metrics

There is no ideal methodology. So, let’s look at the characteristics of the various methodologies.

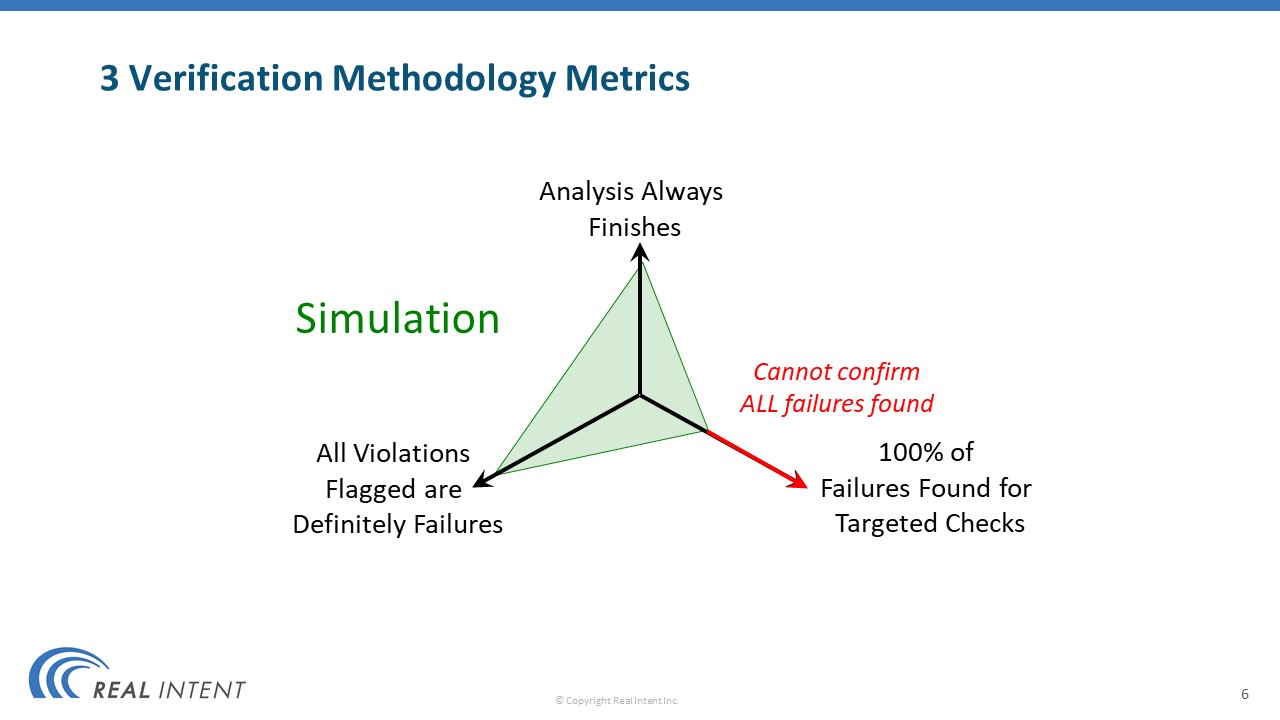

Simulation

Let’s look at simulation first.

We know that simulation finishes. When you create a test bench, you have some idea of how much time it will take for simulation to run, and that it will finish.

We also know that for simulation the failures that are flagged are definite failures.

However, simulation is the weakest on the third metric; it cannot confirm that 100% of failures have been detected.

The weakness of a methodology is where the engineering effort must be spent to overcome that deficiency.

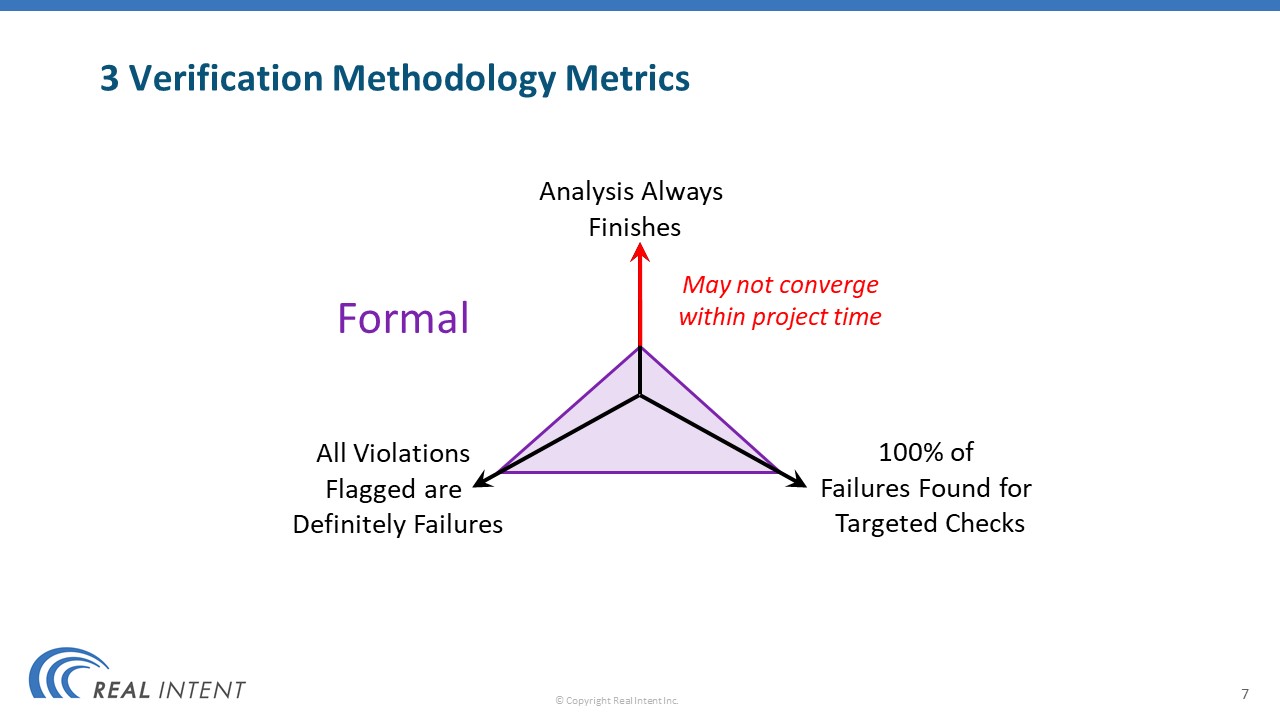

Formal

Next, let’s look at the characteristics of formal methods.

We know that in formal analysis, if a failure is flagged, it’s a definite failure. At the same time formal analysis is very capable of finding 100% of the failures; that is to say, it is capable of proving correctness.

But it lacks on the third dimension, which is the performance dimension; the formal analysis may not finish in the project timeline.

As a result, all the engineering effort goes into figuring out how to improve the completion rate for the formal analysis. This is the weakest dimension for formal analysis.

Static Sign-Off

If you look at static sign-off, it’s a very different kind of a methodology.

Static sign-off analysis finishes. Static sign-off also finds 100% of the failures that are targeted by the checks.

However, static sign-off does not find definite failures — it finds potential failures, or the so-called “noise”. The noise level for the static sign-off tools will vary from tool to tool; the accuracy will also vary from product to product.

It makes a trade-off in the third dimension. The idea is that if you can iterate and clean out all potential violations that are reported then you have sign-off.

This is the characterization of the three methodologies.