Samsung: Static Verification Sign-Off across Multiple Domains

DAC 2019 panel presentation by Brian Choi of Samsung (edited transcript)

Case Study Overview

Brian Choi of Samsung presented a case study on Samsung’s RTL linting and clock domain crossing sign-off methodology.

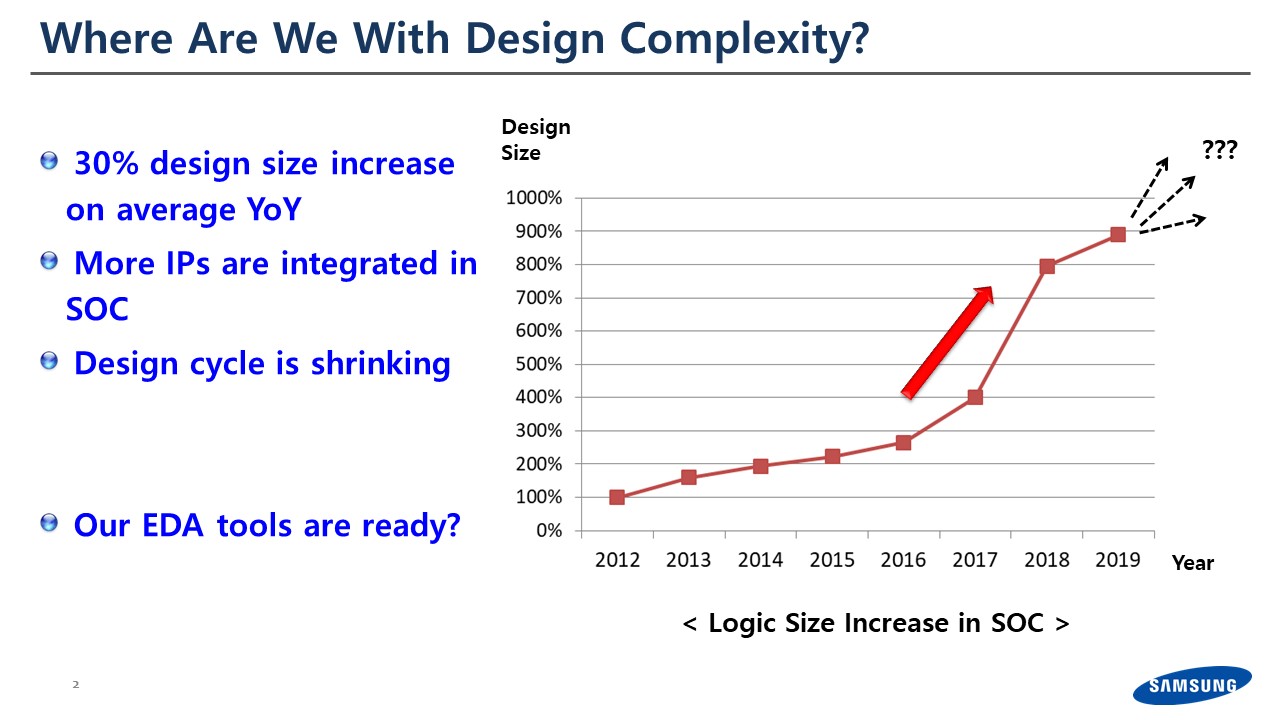

Design Challenges: Design size & Increasing IP integration

I’d like to share some of the challenges and some of the experiences that I’ve had at Samsung and previous companies, especially for static verification.

Every year, whether you look at SoCs or any other silicon, we’re increasing our design complexity and design size by about 30%. This is true at Samsung as well. I’m in charge of verification for the SoC that goes into our smartphones. The number of IPs we’re integrating into a single piece of silicon is close to one thousand.

Also, in the mobile market, our customers are always asking for a shorter time-to-market. We always have these challenges. So, let me talk about a couple of things.

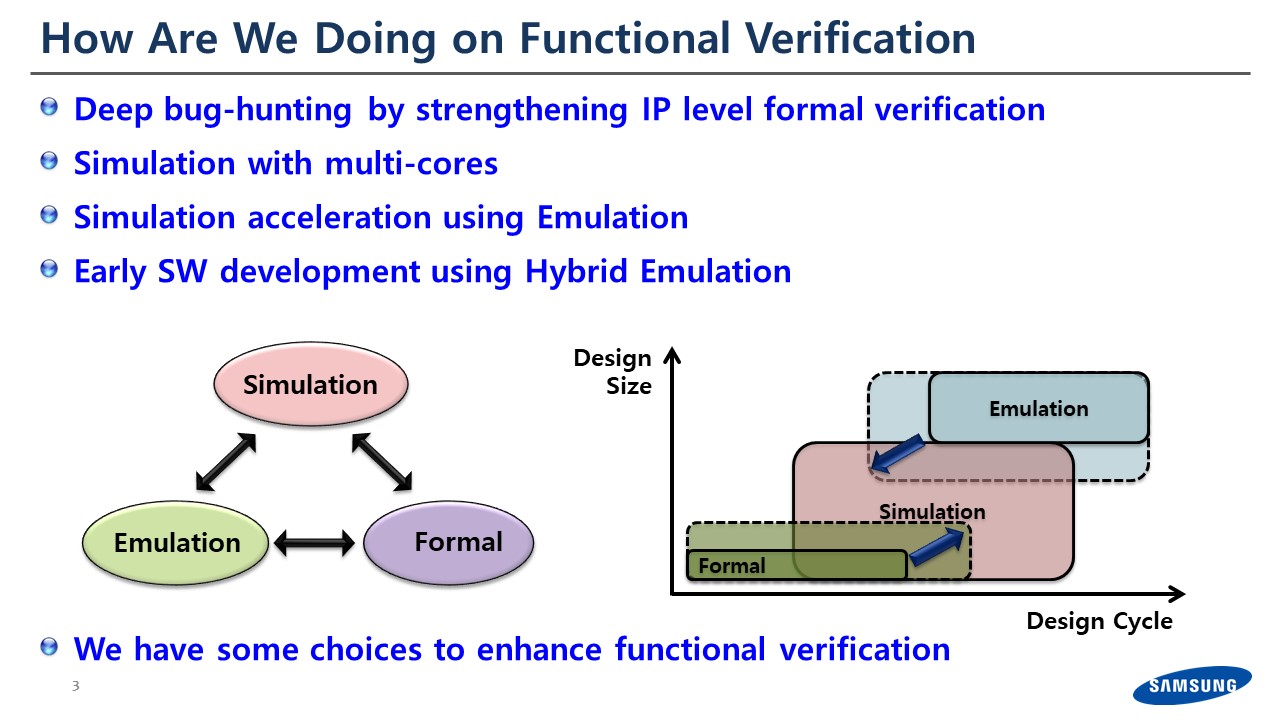

Simulation, Formal, and Emulation

Before I jump into static verification: we spend the majority of time in functional verification — this is a mixture of dynamic and static verification. In this area we mainly have three verification engines. We have simulation, formal as static verification, and emulation.

Given the design cycle, we start using simulation and formal for the IP-level verification. And when the design size gets bigger and eventually gets to the SoC or full-chip level, we use simulation and emulation.

Sometimes, if we need to run very long scenarios, we end up mixing the virtual platform and emulation. For functional verification we have multiple choices to cover various behaviors.

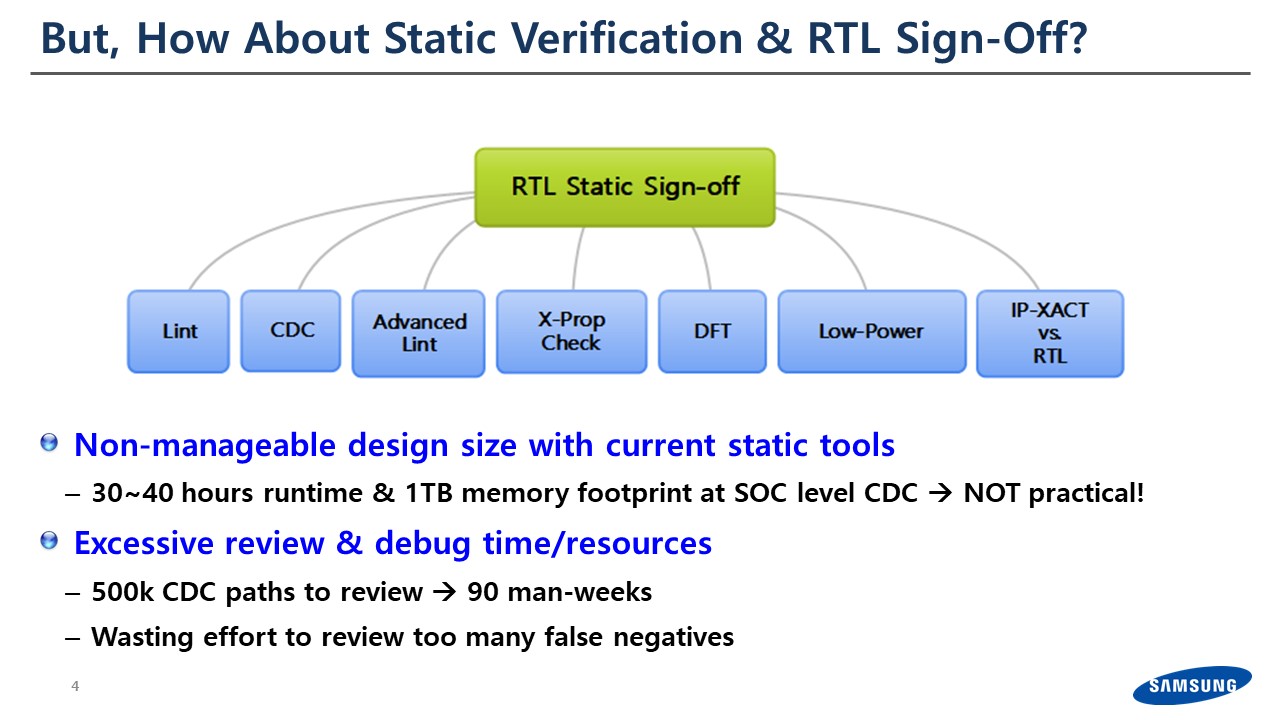

Static Verification & RTL Sign-Off

For static verification, especially RTL sign-off, I’ve listed the most popular static verification tools. The previous speakers talked about lint and CDC and other things. Let me just give you one example for the CDC, since I’ve been working on CDC with Prakash [Prakash Narain, Real Intent CEO] for a while.

We used to have ~30-40 hours of runtime for the CDC analysis for just one iteration; and usually the memory footprint went to one terabyte. In our short design cycle, this is reaching the limit.

The previous discussion mentioned we have noise in static verification. With CDC, I call these “false negatives”. Usually we had to review all of them no matter how much noise we had. We didn’t know if it was a false or a true negative, so in our design cycle we ended up spending about 90 engineering-weeks. This is a pretty long period for review.

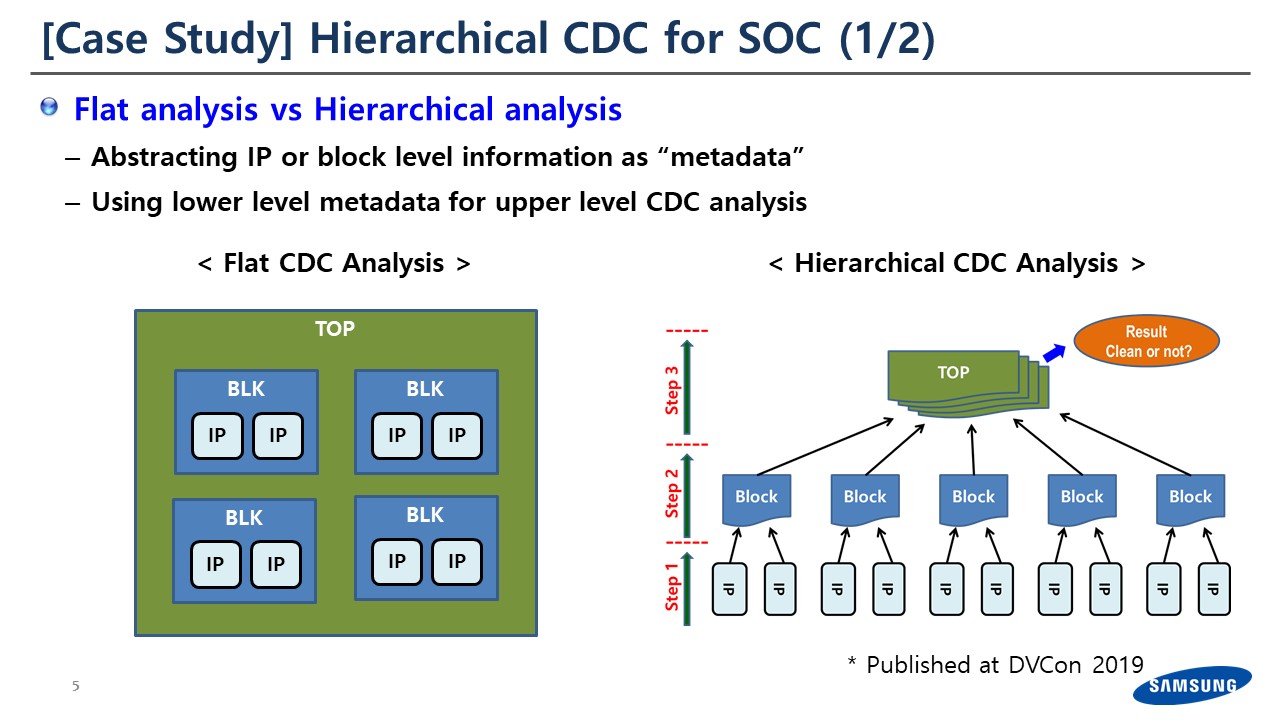

Divide & Conquer Approach

We did a case study. We previously did flat CDC analysis; basically, we would flatten the entire design and then analyze the CDC at the flat level. Although we had the IP-level and cluster block-level information in it and tried to reuse it, we were basically using flat-level CDC until last year.

The divide and conquer solution that we adopted is fairly simple.

We abstract the IP-level or the block-level information as metadata, and then do the high-level CDC analysis based on this metadata.
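To make the idea concrete, here is a minimal sketch of what checking crossings from block metadata could look like. It is not Samsung's flow or any CDC tool's data model: the `Port` and `BlockAbstract` classes, the `find_unsynchronized_crossings` function, and the block and clock names are all hypothetical, and a real abstraction would carry far more information than one clock and one flag per port.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Port:
    """Hypothetical boundary port of an abstracted block."""
    clock: str                  # clock domain that launches or captures the signal
    synchronized: bool = False  # inputs only: the block synchronizes this port internally


@dataclass
class BlockAbstract:
    """Hypothetical metadata kept for a block after its own CDC run."""
    inputs: dict = field(default_factory=dict)   # port name -> Port
    outputs: dict = field(default_factory=dict)  # port name -> Port


def find_unsynchronized_crossings(blocks, connections):
    """Check top-level wires using only block metadata, never block internals."""
    issues = []
    for (src_block, src_port), (dst_block, dst_port) in connections:
        src = blocks[src_block].outputs[src_port]
        dst = blocks[dst_block].inputs[dst_port]
        # A crossing is flagged when the domains differ and the receiver
        # does not declare an internal synchronizer on that input.
        if src.clock != dst.clock and not dst.synchronized:
            issues.append(
                (f"{src_block}.{src_port}", f"{dst_block}.{dst_port}", src.clock, dst.clock)
            )
    return issues


# Made-up example: two blocks, two top-level wires.
blocks = {
    "cpu": BlockAbstract(outputs={"req": Port("clk_cpu"), "dbg": Port("clk_cpu")}),
    "ddr": BlockAbstract(inputs={"req": Port("clk_ddr", synchronized=True),
                                 "dbg": Port("clk_ddr")}),
}
connections = [
    (("cpu", "req"), ("ddr", "req")),
    (("cpu", "dbg"), ("ddr", "dbg")),
]
issues = find_unsynchronized_crossings(blocks, connections)
print(issues)
```

In this sketch the higher level only loops over the connections between blocks, so its cost depends on the number of boundary ports rather than on the size of the logic inside each block. That is the general reason an abstraction-based run can be much smaller than a flat one.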

Results

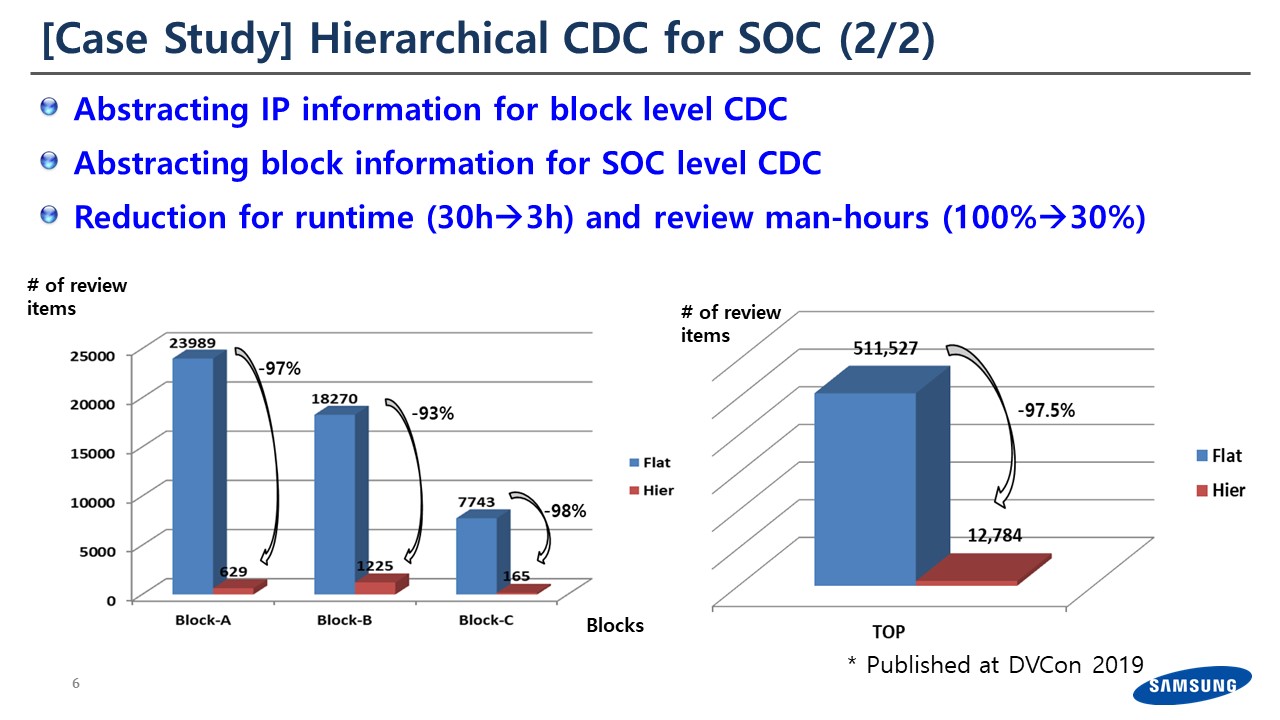

The result is quite amazing. Right now, we have two or three layers of CDC analysis. We abstract the IP-level and use it for one level higher, which is block-level CDC. And then on top of that, we’re actually doing full chip level CDC based on the block-level CDC information.

- The runtime is now 10x faster. We used to spend 30 to 40 hours for the CDC run. We were able to reduce this to 3 hours; so, we can now have multiple iterations per day.

- Another important factor: if you remember, I said we spent about 90 man-weeks reviewing all the CDC results; we were able to reduce this all the way to 30 percent.
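As a quick check on these figures, the arithmetic can be written out as follows. Two things here are assumptions rather than statements from the talk: "30 percent" is read as the review effort that remains (30% of the original 90 weeks), and the runs-per-day figure is an upper bound that assumes back-to-back runs on a 24-hour clock.

```python
# Runtime figures quoted in the talk.
old_runtime_hours = (30, 40)   # flat CDC run, low and high end
new_runtime_hours = 3          # hierarchical CDC run

# Speedup at each end of the quoted range.
speedups = tuple(h / new_runtime_hours for h in old_runtime_hours)

# Upper bound on iterations per day, assuming back-to-back runs.
runs_per_day = 24 // new_runtime_hours

# Review effort. Assumption: "30 percent" is what remains of the original effort.
old_review_weeks = 90
remaining_percent = 30
new_review_weeks = old_review_weeks * remaining_percent / 100

print(f"speedup: {speedups[0]:.1f}x to {speedups[1]:.1f}x")
print(f"runs per day (upper bound): {runs_per_day}")
print(f"review effort: {old_review_weeks} -> {new_review_weeks:.0f} weeks")
```

Under those assumptions, the quoted "10x" corresponds to the low end of the 30 to 40 hour range, and the review effort drops from 90 weeks to 27.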

By doing this we’re able to sign off our SoC in a very short period of time.

Conclusion

Are we done with all of the work in this verification area? Actually, we have a lot of room to improve.

- I didn’t talk about formal verification; but as anybody who has worked on formal verification knows, we have had very good improvement in the last ten years, and formal verification has become one of the major verification engines. But if you look at what size formal can handle, usually it is about one to ten million gates. Right now, our SoCs are close to one billion gates or more.

- We still spend a lot of time on debug; and if you look at the many other static tools, we’re actually reviewing a lot of the noise and false negatives. So, I’m hoping that we have a better way of reviewing all of them. At DAC and at other conferences, we talk about machine learning a lot. To me it makes a lot of sense, because if you look at the verification process, it generates a lot of data. We put a lot of people and knowledge in, to debug and review it. If we can somehow collect all the information that we’re putting together, it could help us to reduce our review time as well.

- Something that I’d like to pose: usually, for functional verification, you can have multiple choices for verification engines. But for static, we rely purely on our generic compute servers. I’m a fan of emulation and would like to use it for other purposes, not just for simulation.

These are the areas that we’re looking at in our company, and we’ve tried to reduce our turnaround time over the last couple of years. Thank you.